NetApp at NVIDIA GTC: Reducing AI infrastructure complexity and risk

Phil Brotherton

NVIDIA GTC is taking place April 12-16, 2021, bringing the premier artificial intelligence (AI) and deep learning conference to audiences around the globe. NetApp is a proud diamond partner of the digital event, where we’ll build momentum with several joint announcements. The NVIDIA Base Command Platform and NetApp® ONTAP® AI integrated solution give enterprises more options to access the critical AI hardware, software, and services they need. Don’t miss our cutting-edge sessions on the latest topics in enterprise AI. Register for free here.

AI is a driving force for technology today; IDC predicts that spending on AI technologies will increase to $97.9 billion by 2023. AI also plays a significant role in the adoption of cloud solutions, now and in the future. Deployed fully and successfully, AI can help teams monitor and manage cloud resources and the vast amount of data available in an organization.

NetApp shares with NVIDIA a vision and history of optimizing AI’s full capabilities and business benefits for organizations of all sizes. For example, we recently teamed to create a conversational AI architecture that delivers the response times necessary to drive real business impact. With NetApp ONTAP AI, powered by NVIDIA DGX systems and NetApp cloud-connected storage, state-of-the-art language models can be trained and optimized for rapid inference. This capability is key: it provides the speed to understand a person’s queries and to respond quickly and accurately to complex natural-language workloads.

AI is becoming a mission-critical success factor for digital transformation in the modern enterprise. However, companies continue to face significant challenges as they attempt to deploy AI hardware and software and integrate AI throughout their operations. Over the last few years, NetApp and NVIDIA have been teaming to create innovative solutions to address these and related enterprise AI challenges, unlocking the value of AI in the enterprise while minimizing risk.

Our unique joint solutions enable new business opportunities for customers by building on NetApp capabilities in four key areas:

- The right experts and the right solutions at the right time maximize researcher efficiency.

- Advanced tools simplify model manageability, traceability, and data pipeline management.

- An optimized AI platform, software tools, performance tuning, and management of burst modes accelerate progress from proof of concept to production.

- AI environment configuration and troubleshooting, built on optimized compute, network, and storage for predictable performance and with full support for multivendor technology stacks, eliminate infrastructure and integration challenges.

NetApp at GTC 2021

At GTC 2021, we’re featuring two new joint offerings designed to help enterprises eliminate deployment and integration challenges. These solutions build on our proven ONTAP AI architecture to make high-performance AI infrastructure more easily accessible.

- NVIDIA Base Command Platform offers a new, easy-to-consume and easy-to-manage AI tool with 70% better ROI than unmanaged AI environments.

- With the NetApp ONTAP AI integrated solution you can purchase preconfigured AI systems directly, streamlining on-premises deployment.

Over the last few years, NetApp has released more than 25 reference architectures to address a wide range of use cases and has won numerous AI awards. Today, we support customers running more than 125 enterprise AI projects globally—across industries from healthcare to retail to automotive—and we’re experiencing year-over-year AI business growth of 150%. For NetApp, our data fabric technologies and intense focus on AI partnerships amplify the benefits for customers running our AI platforms.

Additional NetApp highlights for GTC 2021 include the following.

Easier access to essential data science tools

- We’ve enhanced the NetApp Data Science Toolkit, our full-stack data science solution that streamlines data management tasks, building on our heritage of application-integrated data management.

- Key members of our expanding partner network, Domino Data Lab and Iguazio, are demonstrating the synergy between their data science platforms and NetApp hardware and software. This means that teams can run leading data science software with less hassle and the full suite of NetApp data management capabilities.

- Data science consulting partner SFL Scientific is on hand to discuss methods to approach high-value use cases in healthcare, retail, and beyond.

- NetApp is partnering with Lenovo to deliver training and inferencing solutions for small and midsize businesses.

- For high-end AI, HPC, and analytics, enhancements to NetApp EF-Series storage systems enable massive I/O and consistent low latency for NVIDIA DGX A100 systems using the BeeGFS parallel file system and NVIDIA Magnum IO GPUDirect.

Eliminate deployment and integration challenges

No single solution addresses the AI infrastructure needs of every enterprise. That’s why NetApp focuses on providing choice. With our new joint offerings, you now have multiple options for deploying industry-leading AI solutions. Options include building your own environment based on our proven reference architecture, as well as purchasing a preconfigured integrated solution to make it easy to consume AI your way.

NVIDIA Base Command Platform

Coming soon: the NVIDIA Base Command Platform, which combines the industry’s most powerful AI infrastructure with intelligent software tools. This new solution transforms the way that data scientists adopt high-performance AI solutions, accelerating time to value and enhancing analysis and decision making. Customers can avoid the complexities of deploying AI infrastructure in their data centers with a service that offers cloudlike consumption. Base Command Platform speeds time to AI and is easier to use than traditional on-premises deployments, helping customers to maximize the value of their AI investments.

The Base Command Platform will be introduced during sessions at GTC. Together, NVIDIA and NetApp will bring this unique offering to market later in the summer. Select customers are already preparing for an initial PoC from now through the end of July.

We like the attractiveness of Base Command’s telemetry, real-time GPU usage monitoring, and quota management. These are important aspects of ML operational excellence. — Abhay Parasnis, Adobe Chief Technology Officer and Chief Product Officer, Document Cloud

To learn more about Base Command Platform:

- View Session S32762: A New Approach to AI Infrastructure

NetApp ONTAP AI integrated solution

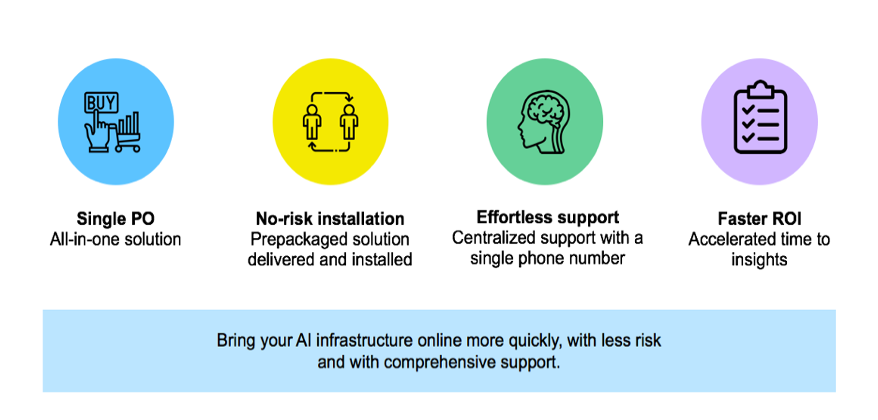

Technology integration challenges prevent many enterprises from deploying AI infrastructure on their premises. NetApp and NVIDIA have created a new integrated solution based on the field-proven NetApp ONTAP AI reference architecture to streamline data center deployment.

With the NetApp ONTAP AI integrated solution, deploying AI has never been easier. If you’re struggling with AI complexity and risk, or if you’re frustrated by traditional multivendor procurement, deployment, and support, this solution is the answer. It offers a predefined, full-stack AI infrastructure that combines NVIDIA DGX A100 servers, NetApp A-Series storage, and NVIDIA Mellanox networking. Choose from three easy-to-deploy sizes (S, M, L) to meet your needs.

Standardized configuration, onsite installation, and one-stop support reduce the complexity of deployment and management. The ONTAP AI integrated solution is available through our reseller network and is configured and fulfilled by Arrow Electronics.

To find out more:

- Read the ONTAP AI integrated solution announcement blog

- View Session SS33180: Next Generation AI Infrastructure—From Reference Architectures to Fully Managed Services

- Check out our Kaon demo to learn about features

Streamlined access to essential data science tools

The best AI hardware is nothing without the right software. That’s why we’re working closely with industry leaders to validate and integrate with the most widely used solutions. And it’s why our expanding AI software ecosystem includes the NetApp AI Control Plane and the NetApp Data Science Toolkit. These in-house solutions make data management simple so that data scientists can focus on data science.

For this year’s GTC, NetApp is joined by several of our leading partners, including Domino Data Lab, Iguazio, and SFL Scientific. We work closely with AI software vendors to validate and integrate the latest solutions for NetApp environments, and we’re also teaming with consulting partners to jumpstart your success.

NetApp Data Science Toolkit

As AI moves into highly regulated and sensitive industries, dataset-to-model traceability and reproducibility become essential. Traditional versioning tools such as Git are designed for code, not data. The NetApp Data Science Toolkit makes it easy for data scientists and engineers to perform necessary housekeeping tasks, simplifying data management, streamlining AI workflows, and enabling traceability. With easy-to-use interfaces and an importable library of Python functions, data scientists can rapidly provision a new data volume or almost instantaneously create a clone. This ability eliminates manual tasks and saves hours of time waiting for tedious data copies to complete. Your teams can efficiently version datasets while preserving the gold source and save NetApp Snapshot™ names in code or a model repository for complete traceability.

We’re constantly adding functionality to the Data Science Toolkit. Our latest version (version 1.2) adds full Kubernetes support, allowing data scientists to provision, clone, make Snapshot copies, and delete JupyterLab workspaces or Kubernetes PVCs for backup and traceability. Data scientists can also prepopulate specific directories on a NetApp FlexCache® volume to create a high-performance AI training tier.
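
To make the traceability idea concrete, here is a minimal Python sketch of saving a Snapshot name alongside a trained model. It is an illustration only and does not call the Data Science Toolkit or any NetApp API. The `TrainingRecord` class, the helper functions, the naming scheme, and all example values are hypothetical. In practice, the snapshot name would come from whatever tool created the Snapshot copy.

```python
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
import json


@dataclass(frozen=True)
class TrainingRecord:
    """Links a trained model to the dataset snapshot it was trained on (hypothetical)."""
    model_name: str
    volume_name: str    # data volume that holds the training set
    snapshot_name: str  # point-in-time copy the training run read from
    code_version: str   # e.g. a Git commit hash for the training code
    created_at: str     # ISO 8601 timestamp of the run


def make_snapshot_name(prefix: str, when: datetime) -> str:
    """Build a sortable, timestamped snapshot name (naming scheme is an assumption)."""
    return f"{prefix}_{when.strftime('%Y%m%d_%H%M%S')}"


def record_training_run(model_name: str, volume_name: str, snapshot_name: str,
                        code_version: str, when: datetime) -> TrainingRecord:
    """Capture which data and which code produced a model."""
    return TrainingRecord(
        model_name=model_name,
        volume_name=volume_name,
        snapshot_name=snapshot_name,
        code_version=code_version,
        created_at=when.isoformat(),
    )


def to_json(record: TrainingRecord) -> str:
    """Serialize the record so it can be stored next to the model artifact."""
    return json.dumps(asdict(record), sort_keys=True, indent=2)


# Example values are made up for illustration.
run_time = datetime(2021, 4, 12, 9, 30, 0, tzinfo=timezone.utc)
snapshot = make_snapshot_name("train_v1", run_time)
record = record_training_run("sentiment-model", "project1_gold", snapshot,
                             "abc1234", run_time)
print(to_json(record))
```

Storing a record like this in a model repository means anyone auditing the model can look up the snapshot name and code version and reproduce the training run against the same data.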

To learn more about the Data Science Toolkit and NetApp’s full range of solutions:

- Read the blog NetApp AI open-source software and tools

- View Session E31919: From Research to Production—Effective Tools and Methodologies for Deploying AI Enabled Products

Domino Data Lab

Domino Data Lab is changing the way data science teams work. Its popular Domino Data Science Platform—now validated for use with NetApp AI solutions—accelerates research and increases collaboration.

The Domino Data Science Platform, combined with NetApp ONTAP AI, centralizes data science work and infrastructure across the enterprise to give data scientists the power and flexibility to experiment and deploy models faster and more efficiently. — Thomas Robinson, VP of Partnerships at Domino Data Lab

- View Session S32190: Democratizing Access to Powerful MLOps Infrastructure

Iguazio

The Iguazio Data Science Platform enables enterprises to develop, deploy, and manage AI applications at scale. With Iguazio, you can run AI models in real time, deploy them anywhere (multicloud, on premises, or at the edge), and bring your most ambitious AI-driven strategies to life. The Iguazio Data Science Platform is now fully integrated with the NetApp Data Science Toolkit and validated for use with NetApp AI solutions, delivering the performance and scalability to support your most extreme workloads and enabling highly available end-to-end machine learning (ML) pipelines that process and react to massive amounts of data.

To deliver performance at scale, AI hardware and software have to work together. By integrating the Iguazio Data Science Platform with NetApp AI hardware and the NetApp Data Science Toolkit, we are ensuring that data scientists can take full advantage of NetApp’s proven performance and data management to support extreme AI workloads requiring massive amounts of data. – Asaf Somekh, Cofounder and CEO of Iguazio

SFL Scientific

SFL Scientific is a data science consulting partner focused on strategy, technology, and solving business and operational challenges with AI. SFL’s capabilities range from helping you develop your AI strategy to building custom AI applications at scale. Core services include leading cross-functional efforts across business, IT, and operations.

At SFL Scientific, we apply our consulting expertise in data science and data engineering to develop new solutions and amplify companies’ AI efforts. By partnering with NetApp—and leveraging NetApp’s proven advantages in AI hardware, software, and data management—we enable customers to significantly shorten implementation time for critical AI use cases, on premises and in the cloud. —Dr. Michael Segala, CEO & Cofounder of SFL Scientific

- View Session SS33179: COVID-19 Lung CT Lesion Segmentation & Image Pattern Recognition with Deep Learning

An expanding ecosystem of platforms to meet diverse AI needs

NetApp continues to expand its platforms to encompass diverse AI workloads and project needs, enabling customers to always find the right platform at the right cost, from edge to core to cloud. Last October, we announced validation and support for 2-, 4-, and 8-node ONTAP AI configurations using NVIDIA DGX A100, but we’re not resting on our laurels. At GTC 2021, we are discussing additional enhancements to NetApp platforms along with the contributions of key partners like Lenovo that further expand our hardware ecosystem to address more diverse needs.

NetApp and Lenovo partner for affordable AI infrastructure

With the high complexity and integration costs of off-the-shelf AI and ML hardware, finding a predictable and scalable solution that minimizes operational expenses for a small to midsize business is challenging. NetApp and Lenovo are partnering to deliver cost-effective solutions to address these needs.

Our partnership with NetApp combines the benefits of Lenovo ThinkSystem AI-Ready compute platforms with NetApp’s superior data management capabilities. This enables enterprise customers to accelerate AI deployments with simple, easy-to-use solutions to unlock real-time insights. These latest Lenovo and NetApp co-developed innovations deliver edge inferencing and AI training solutions at scale and within budget. — Scott Tease, General Manager, High Performance Computing and Artificial Intelligence, Lenovo Infrastructure Solutions

Edge inferencing solution. Our validated edge inferencing solution offers a simple, smart, and secure option at an affordable price. Built using enterprise-grade NetApp all-flash storage and Lenovo ThinkSystem SE350 servers incorporating the NVIDIA T4 accelerator, this solution offers affordable performance with maximum data protection. It meets demanding and constantly changing business needs by delivering seamless scalability, easy cloud connectivity, and integration with emerging applications.

High-performance AI and ML solution. This validated solution delivers scalable performance, streamlined data management, and rock-solid data protection in a scale-out architecture. Built with enterprise-grade NetApp all-flash storage and Lenovo ThinkSystem SR670 servers with NVIDIA A100 Tensor Core GPUs, this solution offers a favorable balance of performance and cost.

EF-Series enhancements for extreme scale

NetApp EF-Series AI tightly integrates DGX A100 systems, NetApp EF600 all-flash arrays, and the BeeGFS parallel file system with InfiniBand networking, delivering maximum I/O bandwidth and minimum latency for the most demanding AI and HPC needs.

BeeGFS is a parallel file system that provides great flexibility, key to meeting the needs of diverse and evolving AI workloads. NetApp EF-Series storage systems supercharge BeeGFS storage and metadata services by offloading RAID and other storage tasks, including drive monitoring and wear detection.

NVIDIA Magnum IO GPUDirect enables data to move directly from the NetApp EF600 systems into GPU memory, bypassing the CPU. Direct memory access from storage to GPU relieves the CPU I/O bottleneck, increases bandwidth, decreases latency, and decreases the load on both CPU and GPU.

- View Session SS33181: Go Beyond HPC—GPUDirect Storage, Parallel File Systems and More

NetApp GTC session summary

| Session | Link |
| --- | --- |
| NetApp and NVIDIA AI infrastructure solutions | |
| Session SS33180: Next Generation AI Infrastructure—From Reference Architectures to Fully Managed Services | View session |
| Session S32762: A New Approach to AI Infrastructure | View session |
| Data science software and partner solutions | |
| Session E31919: From Research to Production—Effective Tools and Methodologies for Deploying AI Enabled Products | View session |
| Session S32190: Democratizing Access to Powerful MLOps Infrastructure (with Domino Data Lab) | View session |
| Session S32161: Large-Scale Distributed Training in Public Cloud | View session |
| Session SS33179: COVID-19 Lung CT Lesion Segmentation & Image Pattern Recognition with Deep Learning (with SFL Scientific) | View session |
| Expanded platform solutions | |
| Session S32187: AI Inferencing in the Core and the Cloud | View session |
| Session SS33181: Go Beyond HPC—GPUDirect Storage, Parallel File Systems and More | View session |
| Session SS33178: How to Develop a Digital Architecture and Accelerate the Adoption of AI and ML Across the DoD | View session |

To learn more about the full range of NetApp AI solutions, visit netapp.com/ai and our GTC landing page.